Humans constantly make sense of unstructured data. Neural networks can now be trained not only to learn from humans but, on some tasks, to surpass human ability to interpret critical data and turn it into actionable insights.

Look at the image above. What do you see? It’s simply a newspaper cartoon. You and I recognize what we see effortlessly.

We can also spot the tip of a long, grey tail and run out of the room screaming “Rat!” Or we can see a single leg and tell whether it belongs to a person or a table.

Computers, on the other hand, have long struggled to acquire this basic, almost trivial human ability. They need labeled images that declare what a dog looks like, and then they learn to identify dogs by working through thousands of pictures, including confusing ones in which unhelpful owners have dressed their pets in flashy outfits.

This is because data is conventionally processed in structured form: rows and columns of neat numbers in an Excel sheet, which are easy to make sense of. Data represented as numbers can be searched quickly in a database and processed with traditional models. Unstructured data is not remotely as easy.

Unstructured Data: What’s The Big Deal?

Almost 80% of the data that businesses produce, from audio files to images and videos, is unstructured, and so it remains underutilized. Instead, we tinker with structured data, such as accounting information, using cloud computing.

Yet unstructured data, such as customer feedback or a product photo attached to an order, can deliver big insights. What if it could be processed easily, not just with traditional models or in the cloud, but at the edge (read: locally), using the device’s own computational power?

Advances in machine learning and computing power can make this happen. The ability of artificial intelligence, aided by neural networks, to handle unstructured data is a long-awaited disruption.

Here’s how:

Intelligent Insights In Satellite Data

Take satellite data, for instance. It can be used to track displaced refugees to deliver aid, to estimate economic activity by counting cars, and to monitor air quality. But say we only manage to acquire a limited number of images, or a single video, carrying this huge volume of information. The machine learning rule book says we need many such files to train a neural network, but the available data is sparse. Thankfully, generative adversarial networks (GANs) can learn to mimic the distribution of their training data. They can be trained to generate synthetic images or videos that are freakishly similar to the originals.
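For readers curious how a GAN learns to mimic data, the original GAN formulation pits a generator $G$, which maps random noise $z$ to a synthetic sample, against a discriminator $D$, which outputs the probability that its input is real. The two are trained on opposite sides of a single objective:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

$D$ tries to push $D(x)$ toward 1 on real images and $D(G(z))$ toward 0 on generated ones, while $G$ tries to make $D(G(z))$ large. In the idealized setting analyzed with this objective, the optimum is reached when the generator's output distribution equals $p_{\text{data}}$, at which point $D$ can do no better than outputting $\tfrac{1}{2}$ everywhere. Real training only approximates that optimum.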

The next problem is identifying what’s in the images. Today, satellite photographs are often labeled manually: experts painstakingly identify regions, then tag and label them. Deep learning approaches such as the Region-based Convolutional Neural Network (R-CNN) can automate much of this process.

These approaches pick out candidate regions in an image and then identify the object in each one. This may sound familiar: most of us have seen Google split a street image into a grid and ask us to select the squares containing traffic lights to prove that we’re human. In effect, our selections can serve as labels from which a neural network learns.

R-CNN is similarly trained to identify objects in a satellite image, anything from a fishing boat to a lighthouse or a tree. That is quite a leap from the slow process of manual identification and tagging, isn’t it?
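The region-by-region idea can be sketched in a few lines of Python. This is not R-CNN itself, which runs a trained CNN over proposed regions of varying size; it is a simplified illustration that splits an image into a fixed grid, much like the CAPTCHA, and applies a hypothetical stand-in classifier, `classify_tile` (a brightness threshold here), to each tile.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities in [0, 1]


def split_into_tiles(image: Image, tile_h: int, tile_w: int) -> List[Tuple[int, int, Image]]:
    """Split an image into non-overlapping tiles; returns (grid_row, grid_col, tile)."""
    tiles = []
    for top in range(0, len(image), tile_h):
        for left in range(0, len(image[0]), tile_w):
            tile = [row[left:left + tile_w] for row in image[top:top + tile_h]]
            tiles.append((top // tile_h, left // tile_w, tile))
    return tiles


def classify_tile(tile: Image) -> str:
    """Hypothetical stand-in for a trained CNN: labels bright tiles as 'object'."""
    pixels = [p for row in tile for p in row]
    return "object" if sum(pixels) / len(pixels) > 0.5 else "background"


def detect_by_regions(image: Image, tile_h: int, tile_w: int,
                      classifier: Callable[[Image], str] = classify_tile) -> List[Tuple[int, int]]:
    """Classify each tile separately and return the grid positions labeled 'object'."""
    return [(r, c) for r, c, tile in split_into_tiles(image, tile_h, tile_w)
            if classifier(tile) == "object"]
```

For a 4×4 image whose only bright patch is the top-right 2×2 corner, `detect_by_regions(image, 2, 2)` returns `[(0, 1)]`. Note that the classifier runs once per region, which is exactly the cost that single-pass detectors try to avoid.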

It is, and technology keeps advancing rapidly. A newer approach called YOLO (You Only Look Once) does not divide an image into regions and try to identify the objects in each one separately. Instead, a single network looks at the whole image once and locates, tags, and classifies objects at the same time.
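To make “looking once” concrete, here is a simplified Python sketch of how a YOLO-style output could be decoded. It assumes, following the original YOLO design, that the network emits one prediction per cell of an S×S grid (box position, box size, a confidence score, and class probabilities); we simplify to a single box per cell, and the prediction values in the example are made up for illustration rather than produced by a real model.

```python
from typing import List, Tuple

# One cell's prediction: (x, y, w, h, confidence, class_probs)
# x, y: box center relative to the cell (0 to 1); w, h: box size relative to the image (0 to 1)
CellPrediction = Tuple[float, float, float, float, float, List[float]]
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def decode_grid(predictions: List[List[CellPrediction]], class_names: List[str],
                threshold: float = 0.5) -> List[Detection]:
    """Turn every grid cell's prediction into detections in one pass over the grid.

    Returns (class_name, score, (cx, cy, w, h)) with coordinates relative to the image.
    """
    s = len(predictions)  # the grid is s x s
    detections = []
    for row in range(s):
        for col in range(s):
            x, y, w, h, conf, class_probs = predictions[row][col]
            best = max(range(len(class_probs)), key=lambda k: class_probs[k])
            score = conf * class_probs[best]  # class-specific confidence
            if score >= threshold:
                cx = (col + x) / s  # convert the cell-relative center to image coordinates
                cy = (row + y) / s
                detections.append((class_names[best], score, (cx, cy, w, h)))
    return detections


empty = (0.5, 0.5, 0.1, 0.1, 0.05, [0.5, 0.5])
boat = (0.5, 0.5, 0.2, 0.1, 0.9, [0.9, 0.1])
detections = decode_grid([[empty, boat], [empty, empty]], ["boat", "lighthouse"])
```

Here only the top-right cell passes the threshold, yielding one "boat" detection centered at (0.75, 0.25) with a score of about 0.81. A full detector would usually also apply non-maximum suppression to remove overlapping boxes.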

Clearly, it’s not just humans who can make sense of unstructured data anymore.

Speech Recognition

Speech is another kind of unstructured data that has seen spectacular progress. Human speech is inherently ambiguous, yet we have achieved end-to-end speech recognition and brought assistants like Siri and Alexa into our lives.

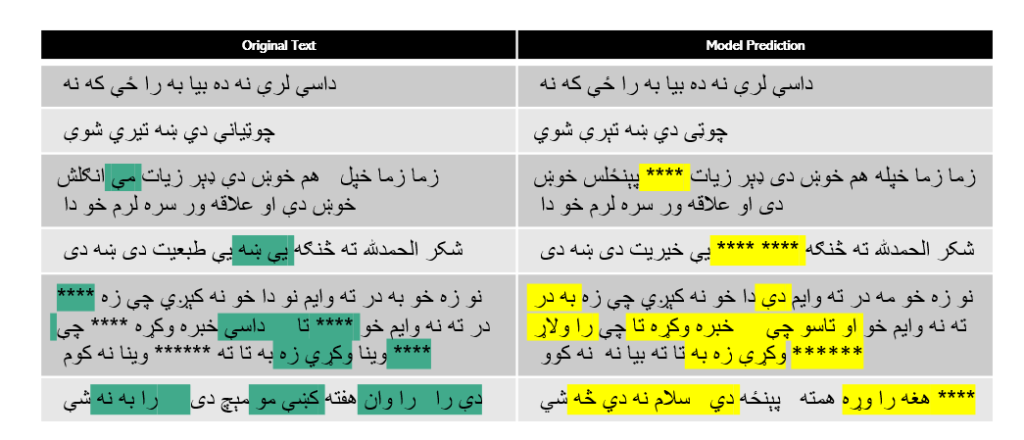

However, automatic speech recognition and transcription are easier for widely spoken languages such as English, which have abundant training data. For languages such as Pashto or Kongo, where the volume of data available for training is limited, recognition and transcription become a challenge. But open-source toolkits like Kaldi and ESPnet are coming in handy, helping us make sense of limited speech data, quite literally.
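How well a recognizer copes with scarce data is commonly measured by word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the system’s transcript into a reference transcript, divided by the number of reference words. Below is a minimal Python sketch using the standard edit-distance dynamic program. It is our own illustration; speech toolkits typically ship their own scoring tools.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edit distance between the first i reference words and first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)
```

For example, a transcript that drops one word from the six-word reference "the cat sat on the mat" scores a WER of 1/6, about 0.17.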

Healthcare

Take healthcare. We previously discussed how chronic inflammation can serve as a measure of wellness, but measuring chronic inflammation requires a lot of data. Even if doctors had access to such data through bio-impedance measurements, wearables that track heart rate variability, or images of tongues, eyes, or nails, they would still need to order a battery of tests before correlating it with chronic inflammation.

Artificial intelligence can bridge that yawning gap. Deep neural networks can be trained to process patient data of multiple types (point data, time series data, and images) together, drawing potentially life-changing insights that were previously impossible. Going forward, these insights can be not only data-driven but also serve as brainstorming triggers that help us solve complex problems creatively.
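One common way to let a single model use point data, time series, and images together is feature-level fusion: turn each input into a feature vector and concatenate the vectors before the model makes its prediction. The Python sketch below shows only that plumbing, under loudly stated assumptions: the input names and the hand-written features (mean and standard deviation of a series, mean intensity of a grayscale image) are hypothetical choices of ours, whereas a deep network would learn an encoder for each data type instead.

```python
from statistics import mean, pstdev
from typing import Dict, List


def time_series_features(series: List[float]) -> List[float]:
    """Summarize a time series (e.g. hypothetical heart-rate-variability readings)."""
    return [mean(series), pstdev(series)]


def image_features(image: List[List[float]]) -> List[float]:
    """Summarize a grayscale image by mean intensity (a stand-in for a learned image encoder)."""
    return [mean(p for row in image for p in row)]


def fuse_patient_record(point_data: Dict[str, float], series: List[float],
                        image: List[List[float]]) -> List[float]:
    """Concatenate features from all three data types into one vector for a downstream model."""
    point_features = [point_data[key] for key in sorted(point_data)]  # fixed key order
    return point_features + time_series_features(series) + image_features(image)


vector = fuse_patient_record({"age": 52.0, "bmi": 27.5},
                             [40.0, 50.0, 60.0],
                             [[0.2, 0.4], [0.6, 0.8]])
```

The result is a single five-number vector (two point values, two time-series statistics, one image statistic). Sorting the point-data keys keeps the feature order stable across patients, which any downstream model would rely on.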

The folks at Myelin Foundry are employing multiple tactics to make sense of unstructured data. “We love complexity,” Gopichand Katragadda, the Founder and CEO, says. “We thrive in our ability to gain insights from unstructured data. While labeling reduces complexity, useful information is also lost in the process. Our differentiation is to provide content-aware insights at the edge, protecting data privacy and data security.”

On the Edge: Enabling Security and Privacy

We’ve established that neural networks need data to acquire the human abilities of easy identification and distinction. But collecting large datasets often compromises users’ privacy.

What if unstructured data could be processed locally to draw inferences, on your existing hardware rather than by syncing it to the cloud? That’s where edge computing comes in.

Today’s smartphones and computers have tremendous computational power that remains underutilized. If users want to keep their data private and prevent it from being shared with the cloud, an edge device can process it locally, on the device itself.

This gives users a level of privacy that is hard to guarantee once data is shared with the cloud. Beyond privacy, imagine how easily crucial healthcare data could be processed locally to provide actionable insights in far-flung towns and rural areas with limited access and connectivity.

Harnessing the computational power of smartphones and TVs could also change the world of entertainment. Instead of a tiresome, constant back-and-forth with the cloud for high-resolution video, a device could receive a lower-resolution stream and use super-resolution to upscale it locally, reducing buffering and improving users’ quality of experience.
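The bandwidth argument behind on-device upscaling is simple arithmetic: streaming a frame at half the width and half the height sends only a quarter of the pixels, and the device fills in the rest. Learned super-resolution uses a neural network for that filling-in. The Python sketch below substitutes the simplest classical baseline, nearest-neighbor upscaling, purely to show the data flow on the device; it is not super-resolution and recovers no detail.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame as rows of pixel values


def upscale_nearest(frame: Frame, factor: int) -> Frame:
    """Enlarge a frame by repeating each pixel factor x factor times (nearest-neighbor)."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    upscaled = []
    for row in frame:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        upscaled.extend(list(wide_row) for _ in range(factor))  # copies keep rows independent
    return upscaled


def pixel_fraction_sent(factor: int) -> float:
    """Fraction of full-resolution pixels transmitted when each side is scaled down by factor."""
    return 1 / (factor * factor)
```

For example, `upscale_nearest([[1, 2], [3, 4]], 2)` produces the 4×4 frame `[[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]`, and `pixel_fraction_sent(2)` is `0.25`. A learned model would replace `upscale_nearest` while keeping the same input and output shapes.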

The benefits are numerous. From healthcare and national security to speech recognition, processing unstructured data at the edge is at the heart of many developments. And it is quickly becoming clear that evolving AI technology and neural networks can help unlock its vast, untapped potential to learn from human work and aid it, not replace it.